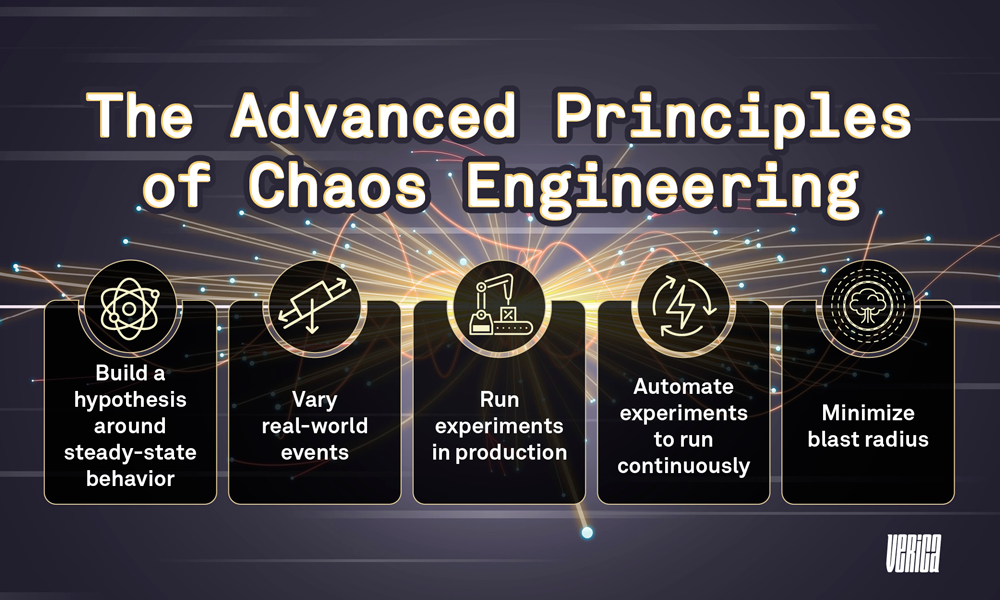

The Advanced Principles of Chaos Engineering

In our previous post, “What Chaos Engineering Is (and Isn’t),” we laid out the key principles behind the practice. Chaos Engineering is grounded in empiricism, experimentation over testing, and verification over validation. But not all experimentation is equally valuable. The principles of Chaos Engineering extend to a “gold standard” captured in a set of advanced principles:

- Build a hypothesis around steady-state behavior

- Vary real-world events

- Run experiments in production

- Automate experiments to run continuously

- Minimize blast radius

Build a Hypothesis Around Steady-State Behavior

Every experiment begins with a hypothesis. For availability experiments, the form of the experiment is usually:

Under ______ circumstances, customers still have a good time.

For security experiments, by contrast, the form of the experiment is usually:

Under ______ circumstances, the security team is notified.

In both cases, the blank space is filled in by the variables you determine. The advanced principles emphasize building your hypothesis around a steady-state definition. This means focusing on the way the system is expected to behave, and capturing that in a measurement. In the preceding examples, customers presumably have a good time by default, and security usually gets notified when something violates a security control.

This focus on steady state forces engineers to step back from the code and focus on the holistic output. It captures Chaos Engineering’s bias toward verification over validation. We often have an urge to dive into a problem, find the “root cause” of a behavior, and try to understand a system via reductionism. Doing a deep dive can help with exploration, but it is a distraction from the best learning that Chaos Engineering can offer. At its best, Chaos Engineering is focused on key performance indicators (KPIs) or other metrics like SLOs that track with clear business priorities, as those make for the best steady-state definitions.
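To make the idea concrete, here is a minimal Python sketch of a steady-state hypothesis expressed as a measurement. The class name, the relative-tolerance check, and the example metric are illustrative assumptions, not part of any particular tool: the hypothesis holds if the observed KPI stays within a chosen tolerance of its steady-state value while the experimental condition is applied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SteadyStateHypothesis:
    """'Under <condition>, <metric> stays near its steady state.' (illustrative only)"""
    condition: str       # the circumstances filled into the blank
    metric: str          # a KPI/SLO-style measurement tied to business priorities
    steady_state: float  # value of the metric when no experiment is running
    tolerance: float     # allowed relative deviation, e.g. 0.05 means 5%

    def holds(self, observed: float) -> bool:
        """True if the observed metric stays within tolerance of steady state."""
        if self.steady_state == 0:
            return observed == 0
        deviation = abs(observed - self.steady_state) / abs(self.steady_state)
        return deviation <= self.tolerance

    def describe(self) -> str:
        return (f"Under {self.condition}, {self.metric} stays within "
                f"{self.tolerance:.0%} of {self.steady_state:g}.")


# Hypothetical example: losing one availability zone should not hurt checkouts.
h = SteadyStateHypothesis("loss of one availability zone",
                          "successful checkouts per second", 1000.0, 0.05)
print(h.describe())
print(h.holds(970.0))  # 3% below steady state -> True
print(h.holds(900.0))  # 10% below steady state -> False
```

A security hypothesis (“the security team is notified”) fits the same shape, with a yes/no measurement in place of the numeric tolerance check.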

Vary Real-World Events

This advanced principle states that the variables in experiments should reflect real-world events. While this might seem obvious in hindsight, there are two good reasons for explicitly calling this out:

- Variables are often chosen for what is easy to do rather than what provides the most learning value.

- Engineers have a tendency to focus on variables that reflect their experience rather than the users’ experience.
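One way to counter both tendencies is to rank candidate variables by how often they occur in the real world and how many users feel them, while deliberately ignoring how easy they are to inject. The Python sketch below assumes a hypothetical catalog of events with made-up incident counts and user-impact estimates; it is an illustration of the selection bias, not a prescribed scoring method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateEvent:
    name: str
    incidents_per_year: int   # how often the event contributed to real incidents
    users_affected: float     # estimated fraction of users who notice it (0..1)
    ease_of_injection: float  # how convenient it is to simulate (0..1); NOT used below


def real_world_priority(event: CandidateEvent) -> float:
    """Rank by real-world frequency and user impact, ignoring convenience."""
    return event.incidents_per_year * event.users_affected


def choose_variables(candidates: list[CandidateEvent], k: int) -> list[str]:
    """Return the names of the k highest-priority events."""
    ranked = sorted(candidates, key=real_world_priority, reverse=True)
    return [event.name for event in ranked[:k]]


# Hypothetical catalog; every number here is made up for illustration.
catalog = [
    CandidateEvent("terminate a random instance", 1, 0.01, 0.9),
    CandidateEvent("slow DNS resolution", 4, 0.30, 0.4),
    CandidateEvent("payment provider timeouts", 3, 0.20, 0.2),
]
print(choose_variables(catalog, 2))
# ['slow DNS resolution', 'payment provider timeouts']
```

Note that the easiest event to simulate drops out of the selection, because it rarely shows up in incidents and few users notice it.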

Run Experiments in Production

Experimentation teaches you about the system you are studying. If you are experimenting in a Staging environment, then you are building confidence in that environment.

To the extent that the Staging and Production environments differ, often in ways that a human cannot predict, you are not building confidence in the environment that you really care about. For this reason, the most advanced Chaos Engineering takes place in Production.

This principle is not without controversy. Certainly in some fields there are regulatory requirements that preclude the possibility of affecting the Production systems. In some situations there are insurmountable technical barriers to running experiments in Production. It is important to remember that the point of Chaos Engineering is to uncover the chaos inherent in complex systems, not to cause it. If we know that an experiment is going to generate an undesirable outcome, then we should not run that experiment. This is especially important guidance in a Production environment where the repercussions of a disproved hypothesis can be high.

As an advanced principle, there is no all-or-nothing value proposition to running experiments in Production. In most situations, it makes sense to start experimenting on a Staging system, and gradually move over to Production once the kinks of the tooling are worked out. In many cases, critical insights into Production are discovered first by Chaos Engineering on Staging.

Automate Experiments to Run Continuously

This principle recognizes a practical implication of working on complex systems.

Automation is important in such systems for two reasons:

- To cover a larger set of experiments than humans can cover manually. In complex systems, the conditions that could possibly contribute to an incident are so numerous that they can’t be planned for. In fact, they can’t even be counted because they are unknowable in advance. This means that humans can’t reliably search the solution space of possible contributing factors in a reasonable amount of time. Automation provides a means to scale out the search for vulnerabilities that could contribute to undesirable systemic outcomes.

- To empirically verify our assumptions over time, as unknown parts of the system are changed. Imagine a system where the functionality of a given component relies on other components outside of the scope of the primary operators. This is the case in almost all complex systems. Without tight coupling between the given functionality and all the dependencies, it is entirely possible that one of the dependencies will change in such a way that it creates a vulnerability.
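Automation is what makes a search of that size practical. The Python sketch below uses a hypothetical catalog of six services and three fault types: even this small, fully known catalog yields nearly a thousand combinations of up to three simultaneous faults, and the real space cannot be enumerated at all, so an automated runner samples from it rather than trying to cover it. Uniform random sampling is an assumption here; real tools may weight or guide the search differently.

```python
import itertools
import math
import random

# Hypothetical service and fault catalogs; real systems have far more of both,
# plus conditions nobody has thought to list.
SERVICES = ["gateway", "auth", "catalog", "cart", "payments", "search"]
FAULTS = ["latency", "errors", "unavailable"]
SINGLE_FAULTS = list(itertools.product(SERVICES, FAULTS))


def combination_count(max_simultaneous: int) -> int:
    """Number of distinct experiments injecting 1..max_simultaneous faults at once."""
    n = len(SINGLE_FAULTS)
    return sum(math.comb(n, k) for k in range(1, max_simultaneous + 1))


def sample_experiments(n: int, max_simultaneous: int, seed=None) -> list:
    """Randomly sample n fault combinations instead of enumerating all of them."""
    rng = random.Random(seed)
    experiments = []
    for _ in range(n):
        k = rng.randint(1, max_simultaneous)
        experiments.append(tuple(sorted(rng.sample(SINGLE_FAULTS, k))))
    return experiments


print(combination_count(3))  # 987 combinations from only 18 single faults
for experiment in sample_experiments(3, max_simultaneous=3, seed=42):
    print(experiment)
```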

Continuous experimentation provided by automation can catch these issues and teach the primary operators how the operation of their own system is changing over time. This could be a change in performance (e.g., the network is becoming saturated by noisy neighbors), a change in functionality (e.g., the response bodies of downstream services now include extra information that could affect how they are parsed), or a change in human expectations (e.g., the original engineers leave the team, and the new operators are not as familiar with the code).

Automation itself can have unintended consequences. The advanced principles maintain that automation is an advanced mechanism to explore the solution space of potential vulnerabilities, and to reify institutional knowledge about vulnerabilities by verifying a hypothesis over time, knowing that complex systems will change.
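The “verify over time” half of this principle can be sketched in a few lines of Python. The names below are hypothetical and the loop is a stand-in for a real scheduler: every hypothesis is re-run on a fixed interval, and the runner flags only hypotheses that held in the previous round but fail now, which is exactly the signal that some part of the system changed underneath its operators.

```python
import time
from typing import Callable, Dict, List

# An experiment runs once and reports whether its steady-state hypothesis held.
Experiment = Callable[[], bool]


def run_round(experiments: Dict[str, Experiment],
              last_result: Dict[str, bool]) -> List[str]:
    """Run each experiment; return names that held last round but fail now."""
    regressions = []
    for name, run in experiments.items():
        held = run()
        if last_result.get(name) is True and not held:
            regressions.append(name)
        last_result[name] = held
    return regressions


def run_continuously(experiments: Dict[str, Experiment], rounds: int,
                     interval_seconds: float,
                     on_regression: Callable[[str], None]) -> None:
    """Re-verify every hypothesis on a fixed schedule (a stand-in for a real scheduler)."""
    history: Dict[str, bool] = {}
    for i in range(rounds):
        for name in run_round(experiments, history):
            on_regression(name)
        if i < rounds - 1:
            time.sleep(interval_seconds)


# Hypothetical: a downstream service starts adding fields our parser cannot handle.
responses = iter([True, True, False])
experiments = {"parse-downstream-response": lambda: next(responses)}
run_continuously(experiments, rounds=3, interval_seconds=0, on_regression=print)
# prints: parse-downstream-response
```

A hypothesis that fails from its very first run is not reported as a regression here; that case is a new finding rather than a change over time, and a real system would surface it through a separate path.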

Minimize Blast Radius

This final advanced principle was added to the original principles after the Chaos Engineering team at Netflix found that they could significantly reduce the risk to Production traffic by engineering safer ways to run experiments. By using a tightly orchestrated control group to compare with a variable group, experiments can be constructed in such a way that the impact of a disproved hypothesis on customer traffic in Production is minimal.
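A minimal sketch of the control-versus-variable comparison, in Python, assuming a simple failure-rate metric and an abort threshold chosen by the team (the names and thresholds are hypothetical, not Netflix's tooling). Because both groups see the same conditions apart from the injected variable, a gap between their failure rates is attributable to the experiment, and stopping as soon as that gap exceeds the threshold keeps the impact of a disproved hypothesis small.

```python
from dataclasses import dataclass


@dataclass
class GroupStats:
    """Running counts for one experiment group."""
    requests: int = 0
    failures: int = 0

    def record(self, ok: bool) -> None:
        self.requests += 1
        if not ok:
            self.failures += 1

    @property
    def failure_rate(self) -> float:
        return self.failures / self.requests if self.requests else 0.0


def hypothesis_disproved(control: GroupStats, variable: GroupStats,
                         max_delta: float, min_requests: int) -> bool:
    """True once the variable group fails noticeably more often than control.

    Waits for min_requests in each group so a handful of requests cannot trigger it.
    """
    if min(control.requests, variable.requests) < min_requests:
        return False
    return variable.failure_rate - control.failure_rate > max_delta


# Hypothetical counts: 2 failures per 1,000 in control, 30 per 1,000 in variable.
control, variable = GroupStats(), GroupStats()
for i in range(1000):
    control.record(ok=i >= 2)
    variable.record(ok=i >= 30)
if hypothesis_disproved(control, variable, max_delta=0.01, min_requests=500):
    print("stop the experiment and route the variable group back to normal")
```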

How a team goes about achieving this is highly context-sensitive to the complex system at hand. In some systems it may mean using shadow traffic, excluding requests that have high business impact (such as transactions over $100), or implementing automated retry logic for requests in the experiment that fail. In the case of the Chaos Team’s work at Netflix, sampling of requests, sticky sessions, and similar functions not only limited the blast radius; they had the added benefit of strengthening signal detection, since the metrics of a small variable group can often stand out starkly in contrast to a small control group. However it is achieved, this advanced principle emphasizes that in truly sophisticated implementations of Chaos Engineering, the potential impact of an experiment can be limited by design.
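One way to combine request sampling, sticky sessions, and the exclusion of high-value transactions is sketched below in Python. It is an illustration under stated assumptions, not the Netflix implementation: sessions are hashed into 10,000 buckets so each session always lands in the same group, a small slice becomes the variable group, an equally small slice becomes the control group, and any transaction over $100 is left untouched. The function names, the hashing scheme, and the bucket count are all hypothetical choices.

```python
import hashlib
from typing import Optional

BUCKETS = 10_000  # resolution of 0.01% of traffic


def bucket(session_id: str, experiment_id: str) -> int:
    """Deterministic bucket, so a session keeps its assignment (sticky session)."""
    digest = hashlib.sha256(f"{experiment_id}:{session_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % BUCKETS


def assign(session_id: str, experiment_id: str, sample_percent: float,
           amount: Optional[float] = None, max_amount: float = 100.0) -> str:
    """Return 'variable', 'control', or 'none' (untouched production traffic)."""
    if amount is not None and amount > max_amount:
        return "none"  # high business impact: never part of the experiment
    slots = round(sample_percent / 100 * BUCKETS)  # e.g. 0.5% -> 50 buckets
    b = bucket(session_id, experiment_id)
    if b < slots:
        return "variable"
    if b < 2 * slots:
        return "control"  # an equally small control group for comparison
    return "none"


print(assign("session-123", "dns-latency", sample_percent=1.0))
print(assign("session-123", "dns-latency", sample_percent=1.0, amount=250.0))  # none
```

Keeping the control group the same small size as the variable group reflects the signal-detection benefit described above: a shift in the variable group's metrics stands out against an equally small baseline.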

All of these advanced principles are presented to guide and inspire, not to dictate. They are born of pragmatism and should be adopted (or not) with that aim in mind.

The Future of the Principles of Chaos Engineering

In the five years since the Principles were published, we have seen Chaos Engineering evolve to meet new challenges in new industries. The principles and foundation of the practice are sure to continue to evolve as adoption expands through the software industry and into new verticals.

When Netflix first started evangelizing Chaos Engineering in earnest at Chaos Community Day in 2015, they received a lot of pushback, from financial institutions in particular. The common concern was, “Sure, maybe this works for an entertainment service or online advertising, but we have real money on the line.” To which the Chaos Team responded, “Do you have outages?”

Of course, the answer is “yes”; even the best engineering teams at high-stakes financial institutions suffer outages. This left two options, according to the Chaos Team: either (a) continue having outages at some unpredictable rate and severity, or (b) adopt a proactive strategy like Chaos Engineering to understand risks in order to prevent large, uncontrolled outcomes. Financial institutions agreed, and many of the world’s largest banks now have dedicated Chaos Engineering programs.

The next industry to voice concerns with the concept was healthcare. The concern was expressed as, “Sure, maybe this works for online entertainment or financial services, but we have human lives on the line.” Again, the Chaos Team responded, “Do you have outages?”

But in this case, an even more direct appeal can be made to the basis of healthcare as a system. When empirical experimentation was chosen as the basis of Chaos Engineering, it was a direct appeal to Karl Popper’s concept of falsifiability, which provides the foundation for Western notions of science and the scientific method. The pinnacle of Popperian notions in practice is the clinical trial.

In this sense, the phenomenal success of the Western healthcare system is built on Chaos Engineering. Modern medicine depends on double-blind experiments with human lives on the line. They just call it by a different name: the clinical trial. Forms of Chaos Engineering have implicitly existed in many other industries for a long time. Bringing experimentation to the forefront, particularly in the software practices within other industries, gives power to the practice. Calling these forms out and explicitly naming them Chaos Engineering allows us to strategize about its purpose and application, and to take lessons learned from other fields and apply them to our own.

In that spirit, we can explore Chaos Engineering in industries and companies that look very different from the prototypical microservice-at-scale examples commonly associated with the practice. FinTech, Autonomous Vehicles (AV), and Adversarial Machine Learning can teach us about the potential and the limitations of Chaos Engineering.

Mechanical Engineering and Aviation expand our understanding even further, taking us outside the realm of software into hardware and physical prototyping.

Chaos Engineering has even expanded beyond availability into security, which is the other side of the coin from a system safety perspective. All of these new industries, use cases, and environments will continue to evolve the foundation and principles of Chaos Engineering.

Casey Rosenthal

Casey Rosenthal was formerly the Engineering Manager of the Chaos Engineering Team at Netflix. His superpower is transforming misaligned teams into high-performance teams, and his personal mission is to help people see that something different, something better, is possible. For fun, he models human behavior using personality profiles in Ruby, Erlang, Elixir, and Prolog.
