Security Chaos Engineering: How to Security Differently

“The growth of complexity in society has got ahead of our understanding of how complex systems work and fail. And when such complexity fails, we still apply simple, linear, componential ideas as if that will help us understand what went wrong.”

—Sidney Dekker, Drift Into Failure [1]

Rapid technological innovation has presented businesses with unique opportunities to unlock new value. And yet, cyber security is failing to keep up with these revolutionary changes. We’re still trying to solve today’s security challenges with yesterday’s solutions, in the midst of a global shortage of qualified practitioners. On top of that, breaches are happening more than ever, despite increases in spending on security tools and solutions. These solutions aren’t helping companies quickly detect, recognize, and respond to rapidly changing relationships and threats. In part, this is due to “Maginot thinking,” which relies heavily on walls, rigidity, construction, compliance, blaming people, and non-transparency (or hyper-secrecy). Compounding all of this, cyber security organizations are incentivized and funded to prioritize regulatory compliance over high-impact security outcomes aligned with business goals.

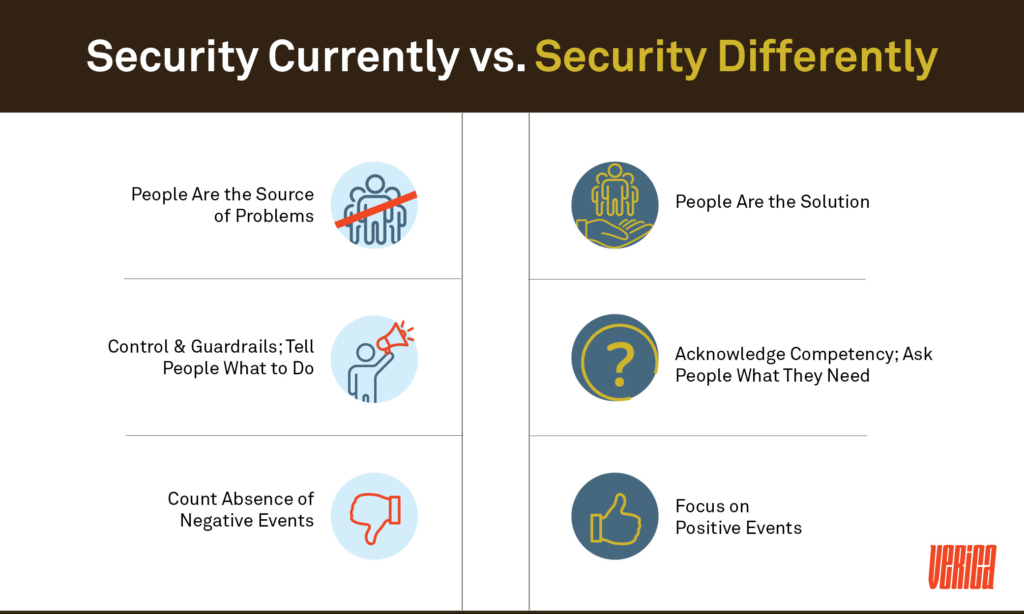

This tangled web of problems that plague traditional security practices is underscored by three main factors:

- Managers view workers as the cause of poor security. Workers make mistakes, they violate rules, which ultimately makes security metrics look bad. That is, workers represent a problem that an organization needs to solve, instead of dealing with the underlying phenomena of security issues.

- Because of this, organizations intervene to try to influence workers’ behavior. Managers develop strict guidelines and tell workers what to do, because workers cannot be trusted to operate securely on their own.

- Organizations measure their security success through the absence of negative events.

In order to advance our capability to understand how humans can securely interact within complex systems, we need to change the way we regard the work that humans perform to keep us secure. Developing rigid top-down procedures, processes, and methods hinges on an assumption that the people developing those mechanisms have an accurate mental model of the entire system they intend to secure. But it is impossible for a human to mentally model a complex system, and the only way to understand its behavior is to interact with it. [2]

This underscores the need for a bottom-up approach: the only people with the appropriate context to understand the complex system are the ones that are interacting with it every day. You can learn a lot more about how security is successfully accomplished by studying and improving the capacity of the daily frontline workers actively performing their tasks than through tracking violations of security rules and policy.

Security Differently challenges the traditional paradigm by flipping traditional thinking on its head, and encourages organizations to grow security from the bottom up, rather than impose it from the top down. The ideas in this post translate 70+ years of research, practices, and lessons learned from the field of Safety Engineering to Cyber Security, largely informed by Sidney Dekker’s Safety Differently lecture series. In his model, the three factors of current security practice described earlier should be inverted:

- People are not the problem to control; workers are the solution. Learn how your people create success on a daily basis and harness their skills and competencies to build more secure workplaces and systems.

- Rather than intervening in worker behavior, intervene in the conditions of people’s work. This involves collaborating with front-line staff and providing them with the right tools, environment, and culture to get the job done securely.

- Measure security as the presence of positive capacities. If you want to stop things from going wrong, enhance the capacities that make things go right.

People Are the Source of Solutions, Not Problems

“People do not come to work to do a bad job.”

—Sidney Dekker, The Field Guide to Understanding ‘Human Error’ [3]

“Human error” is the favored bugaboo of security incidents, often listed as a major contributing factor or the “cause” of an incident or accident. This is fueled by a belief that humans are unreliable, and solutions to “human error” problems should involve changing the people or their role in the system (often by simply removing them). This is a dangerously narrow and misguided view. First, the notion of “human error” relies on hindsight that lends credence to considering a person’s otherwise normal predictions, actions, and assessments problematic. Second, people operating in complex environments or systems (those at the so-called “sharp end”) are necessarily aware of potential paths to failure, and develop strategies to avoid or forestall those negative outcomes. Daily, they adapt to constantly changing and evolving conditions inherent to complex systems.

Punishing or even getting rid of someone who was involved in a problem does not get rid of the conditions that gave rise to the trouble they got into. The idea of humans being the source of our collective security demise becomes even less credible when you consider the fact that security is a human construct. No system is secure by default; it requires humans to create that security for it to exist. In turn, humans are the primary mechanism that keeps us secure.

It’s time for the security world to start taking human performance, instead of human error, seriously. The people building and securing modern, complex systems are at the sharp end of the wedge: they tackle the messy daily work filled with trade-offs, goal conflicts, time pressure, uncertainty, ambiguity, vague goals, high stakes, organizational constraints, and team coordination requirements. They are also responsible for all the times that your systems work as intended, and are secure and available. They keep incidents from happening more often, and prevent them from being worse when they do happen. Security Differently embraces their expertise and adaptive capacity, and gives them the tools and environment in which to best operate.

Competency and Trust Instead of Control and Compliance

As system safety researcher David Woods points out in his work on Graceful Extensibility, failure in complex systems is due to brittleness, not to component failure (which is often the focus of defensive security). Brittleness is the opposite of resilience: it is a sudden collapse or failure when events push the system up to and beyond its boundaries for handling changing disturbances and variations. Brittleness also reflects how rapidly a system’s performance declines when it nears and reaches its boundaries. Non-brittle systems demonstrate adaptive capacity, or the potential for modifying what worked in the past to meet challenges in the future. Adaptive capacity is a relationship between changing demands and responsiveness to those demands, relative to the organization’s goals. Increased rules, guardrails, and heavy-handed policies all serve to increase brittleness and reduce adaptive capacity. In short, they make you more likely to have, and less able to respond to, severe events like security incidents.

If, as described above, you acknowledge that the people that build, maintain, and secure your systems have the most knowledge about them and are therefore poised to deal with perturbations, you must ensure they are able to act and adapt. In Woods’ words:

“Adaptation can mean continuing to work to plan, but, and this is a very important but, with the continuing ability to re-assess whether the plan fits the situation confronted—even as evidence about the nature of the situation changes and evidence about the effects of interventions changes. Adaptation is not about always changing the plan or previous approaches, but about the potential to modify plans to fit situations—being poised to adapt.” [4]

Reactive countermeasures that create more guardrails and hinder operators’ ability to act will only increase the brittleness of your systems, and so weaken their security as well. Designing security to prevent, detect, and remediate based solely on the presence of security failure, without resolving our indifference toward understanding why security succeeds, inevitably has the same effect.

Focus on Positives Instead of Counting Negatives

“A state of safety must also include looking at the things that go well in order to find ways to support and facilitate them.”

—Erik Hollnagel, Safety-II in Practice [5]

The number of servers, endpoints, accounts, networks, and other components is growing rapidly. Whether you attribute this to the growth in software, cloud-based services, microservices, IoT devices, the consumerization of technology, or any other reason, we are now experiencing an increase in size, scale, and complexity that we have never dealt with before. Unless we begin to adopt a Security Differently mindset, we run the risk of drifting further into failure. Practices like focusing on positives rather than counting negatives can shift the culture and mindsets within security teams to look for what is working well, so they can better inform the rest of the organization on how to improve. Additionally, the volume of negatives to count will soon surpass our human ability to process that information, cognitively as well as physically. Consider, for example, the number of alerts and threat intelligence events that are overwhelming just about every security operations team across the globe. It’s like turning on a fire hose for someone dying of thirst. To make matters worse, the number of false positives has only gone up along with the number of alerts. We’re so consumed with what’s going wrong that we aren’t considering the idea of observing what might be going right.

Example Security Negatives we focus on:

- Unpatched Software

- Login Failures

- Security Log Events

- Email Phishing Incidents

- Unauthorized Software

- Security Policy Violations

The current practice of tracking, counting, and measuring the presence of negative events such as email phishing incidents, software defects created, and policy violations doesn’t help us understand what is going right, or how. In fact, most organizations cannot explain what they are doing right without explaining it in terms of what did or did not go wrong. Safety and resilience engineering researcher Erik Hollnagel refers to this as “Safety-I” thinking, which operates under the same incorrect assumptions as the safety world Dekker criticizes: we assume that when we wrote the policy, designed the system, and implemented the relevant security measures, we had an accurate understanding of how the entire system behaved to begin with. This assumption further dilutes our ability to build better security by empowering a top-down culture of telling people what they should and should not do, as if the people writing the rules knew all the options. In contrast, a Safety-II approach focuses on how best to develop an organization’s potential for adaptive capacity: the way it responds, monitors, learns, and anticipates.

For example, as a standard practice in the industry, we count negatives such as system vulnerabilities. Industry standards and frameworks such as those from NIST, PCI, or HITRUST recommend that security practitioners track system vulnerabilities and classify them by risk level. If all we focus on is tracking the negative outcomes, we miss opportunities for proactive improvement that come from studying the systems that are perceived to have no vulnerabilities.

There are skills, methods, tools, and practices that high-performing teams have which make them better at resolving system vulnerabilities than other teams. They are adaptive instead of brittle. That knowledge, tool, method, or practice could be shared with other engineers and teams to proactively improve other teams’ ability to practice the same level of good security hygiene. In this case, we are seeking to improve the competency and common sense of the engineers doing the actual work, rather than relying on reactive tactics that result from only tracking negative outcomes.
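As a rough illustration of looking for positive capacity rather than counting negatives, the sketch below ranks teams by how quickly they resolve vulnerabilities, so that the fastest teams can be asked what they do differently. Everything here is an assumption made for illustration: the team names, the sample numbers, and the choice of median days-to-fix as the signal are invented, and a real organization would pick its own measures.

```python
from collections import defaultdict
from statistics import median

# Invented sample records: (team, days from vulnerability report to fix).
REMEDIATIONS = [
    ("payments", 3), ("payments", 5), ("payments", 4),
    ("search", 21), ("search", 30),
    ("identity", 7), ("identity", 9), ("identity", 8),
]


def median_days_to_fix(records):
    """Group remediation times by team and return each team's median."""
    by_team = defaultdict(list)
    for team, days in records:
        by_team[team].append(days)
    return {team: median(days) for team, days in by_team.items()}


def teams_to_learn_from(records, top_n=1):
    """Return the top_n teams with the lowest median days-to-fix."""
    medians = median_days_to_fix(records)
    return sorted(medians, key=medians.get)[:top_n]


print(median_days_to_fix(REMEDIATIONS))
print(teams_to_learn_from(REMEDIATIONS))
```

The output is a starting point for a conversation with the team, not a score: the goal is to learn which tools and habits make the work go well and to share them.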

Repeatedly during security incidents, we are forced to rely on shallow, low-quality event data that is hard to read and makes it difficult to decipher what went wrong. We often don’t become aware of this until an incident is already under way. Further adding to the mix of challenges, the cognitive load for incident responders is concentrated on getting the system back to an operational state so that the company stops hemorrhaging money. Practices such as Chaos Engineering (the discipline of experimenting on a distributed system in order to build confidence in the system’s capability to withstand turbulent conditions in production) allow us to proactively improve the quality of log and monitoring information before we are forced to rely on it to resolve security incidents. When these experiments reveal gaps in coverage or scanning, we discover them proactively, before they cause customer pain or productivity loss. Over time, this type of feedback helps foster increased levels of confidence, competence, and team intuition.
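To make the idea concrete, here is a minimal sketch of a security chaos experiment run against an in-memory stand-in for a real environment. It injects a misconfiguration (an unexpected open port), checks whether detection notices it, and checks whether the resulting log record carries the fields a responder would need. All names (`FakeEnvironment`, `detect`, `run_experiment`, the port numbers, and the required log fields) are hypothetical and chosen for illustration; this is not the API of ChaoSlingr or any other tool.

```python
from dataclasses import dataclass, field

ALLOWED_PORTS = {443}
# Fields we assume a responder needs in order to understand an event.
REQUIRED_FIELDS = {"event", "port", "actor", "timestamp"}


@dataclass
class FakeEnvironment:
    """In-memory stand-in for a real system (hypothetical)."""
    open_ports: set = field(default_factory=lambda: {443})
    log: list = field(default_factory=list)

    def open_port(self, port, actor):
        self.open_ports.add(port)
        # The quality of this log record is what the experiment evaluates.
        self.log.append({"event": "port_opened", "port": port, "actor": actor})


def detect(env):
    """Return log records for ports opened outside the allowed set."""
    return [r for r in env.log
            if r["event"] == "port_opened" and r["port"] not in ALLOWED_PORTS]


def run_experiment(env, port=2222):
    """Inject a misconfiguration, then check detection and log quality."""
    env.open_port(port, actor="chaos-experiment")  # injection
    alerts = detect(env)
    detected = any(r["port"] == port for r in alerts)
    present = set(alerts[0]) if alerts else set()
    missing = sorted(REQUIRED_FIELDS - present)
    env.open_ports.discard(port)  # roll back the injected change
    return {"detected": detected, "missing_log_fields": missing}


print(run_experiment(FakeEnvironment()))
# {'detected': True, 'missing_log_fields': ['timestamp']}
```

In this toy run the misconfiguration is detected, but the experiment also shows that the log record lacks a timestamp, which is the kind of gap that is far cheaper to find in an experiment than during an incident.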

Security Differently is about educating the broader industry about these concepts and the challenges they present to our ability to be effective at system security. Our industry is beginning to recognize that learning from other domains like safety can make a difference. There are decades of lessons learned, proven practices, and research to draw on from the fields of Nuclear Engineering, Safety Engineering, Resilience Engineering, Medicine, and Cognitive Science that could help the cyber security industry turn the tide on cybercrime. If we don’t continue to evolve our practices and learn from others, we will continue to see breaches, outages, and headlines climb.

1. Drift Into Failure by Sidney Dekker

2. How Complex Systems Fail by Richard Cook

3. The Field Guide to Understanding ‘Human Error’ by Sidney Dekker (See also: Behind Human Error by David Woods, Sidney Dekker, Richard Cook, Leila Johannesen, and Nadine Sarter)

4. The Theory of Graceful Extensibility by David Woods

5. Safety-II in Practice by Erik Hollnagel

Aaron Rinehart

Aaron Rinehart has been expanding the possibilities of chaos engineering in its application to other safety-critical portions of the IT domain, notably cybersecurity. He began pioneering the application of chaos engineering to security during his tenure as the Chief Security Architect at the largest private healthcare company in the world, UnitedHealth Group (UHG). While at UHG, Rinehart released ChaoSlingr, one of the first open-source software releases focused on using chaos engineering in cybersecurity to build more resilient systems. Rinehart recently founded a chaos engineering startup called Verica with Casey Rosenthal from Netflix and is a frequent author, consultant, and speaker in the space.