Four Prerequisites for Chaos Engineering

Ed note: This post presumes you have some familiarity with Chaos Engineering, and are considering whether you can start experimenting with it at your organization. If you’re not familiar with Chaos Engineering, here’s a great post to get you up to speed.

Chaos Engineering is often characterized as “breaking things in production,” which lends it an air of something only feasible for elite or sophisticated organizations. In practice, it’s been a key element in digital transformation from the ground up for a number of companies ranging from pre-streaming Netflix to organizations in highly regulated industries like healthcare and financial services. In this post, I’ll cover the basic things you need in place to be prepared for Chaos Engineering on your team, including a pragmatic way to dip a toe in the water.

Three Common Myths About Chaos Engineering

Before diving into the prerequisites, I want to dispel three myths I often hear about Chaos Engineering, which can derail people’s efforts before they even get started.

Myth 1: Chaos Engineering is an Advanced Capability

This myth holds that your systems must be at a near-wizard level of sophistication before you can consider undertaking Chaos Engineering. It’s often invoked alongside Netflix and other large companies like Google, the conclusion being that you must be at Netflix- or Google-level sophistication (and therefore reliability) in order to run experiments on your systems. But the reality is that Netflix is where it is now because of Chaos Engineering, which was born out of Netflix’s transformation from the data center to the cloud. At the time, Netflix didn’t have a stellar reputation for availability. They earned that reputation by experimenting (safely!) with their systems and putting what they learned into practice, which in turn helped them build more stable systems. Given that history, you could also start where you are now and reach Netflix-level reliability someday.

You may be thinking, “Okay, but for s/company/bank/g or other highly regulated industries where serious money or even lives are on the line, how can Chaos Engineering be safe?” The consequences of outages for such companies are certainly more serious than Netflix going down, but that doesn’t mean these companies aren’t having outages. If anything, such organizations have even more reason to understand their complex systems, because the consequences of their outages are so much higher. Organizations like Capital One, Starling Bank, National Australia Bank, Cerner Corporation, and United Healthcare have also implemented Chaos Engineering as part of their transformation to the cloud, using it to better understand the complexity of the systems they’re building.

In 2016, Starling Bank implemented their own simple Chaos Engineering tool, at the same time that they implemented their core banking system. Former CTO of Starling, Greg Hawkins, lays out the clear benefits they saw as a result:

“From this moment onward, forever, you know your system is not vulnerable to a certain class of problems. See how powerful those choices can be? Especially if you made them as early as Starling Bank did.

When people (technology and business alike) understand what is going on with server failures, you have an important step up towards explaining, normalising and embedding more interesting experimentation.”

Finding Your Safety Boundaries

The main misconception underpinning this first myth is the notion that Chaos Engineering is “breaking things.” This notion is itself broken: Chaos Engineering is fundamentally a practice of experimentation. It helps you understand the safety boundaries of your system and notice when you’re drifting towards them, before you cross them and fall off a cliff. Sidney Dekker covers this in detail in his book Drift Into Failure:

“Adaptive responses to local knowledge and information throughout the complex system can become an adaptive, cumulative response of the entire system—a set of responses that can be seen as a slow but steady drift into failure.”

These adaptive responses come from people—the operators at the sharp end of the wedge—and are generally in response to:

- Uncertainty or competition

- Balancing resource scarcity or cost pressures

- Multiple conflicting goals (internal and external)

These constraints are resolved, reconciled, or balanced through numerous small and large trade-offs made by people throughout the system. Those trade-offs start to push your systems toward your safety boundaries, but you rarely see it happening. In his book, Dekker describes how such trade-offs and drift can lead to dangerous events like the Challenger explosion or the BP Deepwater Horizon explosion.

Chaos Engineering helps you recognize when you’re tiptoeing towards that cliff, instead of unwittingly falling off it and having an outage or incident.

“Chaos Engineering is a practice of experimentation that helps you better understand the safety boundaries of your system, and detect when you are drifting towards those boundaries.”

Myth 2: Things Are Chaotic Enough Already, Why Make Them Worse?

Chaos Engineering isn’t adding chaos to your systems; it’s revealing the chaos that already exists in them. This particular myth is driven in part by the perception that Chaos Engineering is a new, cutting-edge technology. In fact, it borrows from a long tradition of using experimentation to confirm or refute hypotheses about your system. Every experiment begins with a hypothesis that states what you expect to happen when you introduce a change into your system. For availability experiments, such a hypothesis could take this form:

Under _________ circumstances, customers still can buy our product.

The blank is filled in with variables that ideally reflect real-world events, and the hypothesis as a whole focuses on customer experience or KPIs that track to clear business value (versus what engineers might rightfully find interesting). Well-designed experiments that verify (or refute) real-world scenarios, and that limit the blast radius to keep things safe, will tell you more about the inherent chaos and complexity of your systems without making them worse.
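As an illustration only, the template can be captured in code: a hypothesis pairs the circumstance that fills the blank with a customer-facing check on what you observe. The minimal Python sketch below assumes a hypothetical `Hypothesis` class, a hypothetical `checkouts_ok` check, made-up metric names (`checkout_attempts`, `checkout_successes`), and an arbitrary 99% success threshold. None of these come from a particular tool.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Hypothesis:
    """Hypothetical model of 'Under ___ circumstances, customers still can buy our product.'"""
    circumstance: str                     # the real-world event that fills the blank
    steady_state: Callable[[dict], bool]  # customer-facing check on observed metrics

    def statement(self) -> str:
        return f"Under {self.circumstance}, customers still can buy our product."

    def holds(self, observed: dict) -> bool:
        return self.steady_state(observed)


def checkouts_ok(metrics: dict) -> bool:
    """Assumed KPI: at least 99% of checkout attempts succeed."""
    attempts = metrics["checkout_attempts"]
    if attempts == 0:
        return False  # no traffic means nothing was verified, so don't count it as upheld
    return metrics["checkout_successes"] / attempts >= 0.99


h = Hypothesis("the loss of one payment-service replica", checkouts_ok)
print(h.statement())
print(h.holds({"checkout_attempts": 1000, "checkout_successes": 995}))  # True
```

Tying the check to a customer-visible KPI, rather than to an internal metric, keeps the experiment focused on business value.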

Myth 3: You Need Observability Before Starting Chaos Engineering

At Verica, we’re big fans of observability—it enables you to inspect and understand your technology stack and is an excellent follow-on to basic monitoring. But since Chaos Engineering focuses on verifying the output of systems and their impact on customers or users, being able to inspect or understand the internal technical state of your system isn’t a requirement. Additionally, observability can tell you where your systems are now, but not where they might be in the future.

So what do you need to get started with Chaos Engineering? Let’s get into it.

Chaos Engineering Prerequisites

I’ll walk through each of these below in more detail, but here’s a handy list:

- Instrumentation to Detect Changes

- Social Awareness

- Expectations About Hypotheses

- Alignment to Respond

Instrumentation to Detect Changes

Since Chaos Engineering is a practice grounded in experimentation, you need to be able to detect differences between a control group and an experimental group. If you don’t have the instrumentation in place to detect differences such as service degradations, you have no way of knowing whether your experiment is impacting anything. Putting simple, basic logging and metrics in place (things most teams likely already have, thankfully!) will let you tell whether your experimental variable had an effect.
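Detecting a difference can be as simple as comparing one metric between the two groups. The Python sketch below assumes you already collect error and request counts per group. The function names and the fixed 0.5-percentage-point `tolerance` are hypothetical choices, and a real analysis might use a statistical test rather than a fixed threshold.

```python
def error_rate(errors: int, requests: int) -> float:
    """Fraction of requests that failed for one group."""
    if requests <= 0:
        # Without traffic counts there is nothing to compare, which is itself a sign
        # that the instrumentation prerequisite isn't met yet.
        raise ValueError("no requests observed for this group")
    return errors / requests


def detect_degradation(control: tuple[int, int],
                       experiment: tuple[int, int],
                       tolerance: float = 0.005) -> bool:
    """Return True if the experimental group's error rate exceeds the control's by more than tolerance.

    Each group is given as (errors, requests).
    """
    delta = error_rate(*experiment) - error_rate(*control)
    return delta > tolerance


# Hypothetical counts gathered during an experiment window.
print(detect_degradation(control=(12, 10_000), experiment=(90, 10_000)))  # True
print(detect_degradation(control=(12, 10_000), experiment=(15, 10_000)))  # False
```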

Social Awareness

It’s important to get buy-in from anyone who either works on or is impacted by the systems that might be involved in your Chaos Engineering experiments. It’s tempting to sneak in a few preliminary experiments so you have results to show everyone how valuable Chaos Engineering is. But if there is any kind of impact, even outside of production systems, you’ll only create animosity and resistance to any future Chaos Engineering efforts you want to undertake. Take the time to build that consensus before you start experimenting. The good news is that you don’t need your own Raft implementation for this kind of consensus: you just need to talk to people.

Expectations About Hypotheses

As I mentioned in an earlier section, Chaos Engineering presumes that you are designing experiments around a hypothesis about what will happen in real-world scenarios. In particular, those hypotheses should focus on system behaviors you already know well. As system operators, you’re at the sharp end of the wedge and know how those systems work, so you are the best people to come up with realistic hypotheses about what will happen when you change something in a given system. What you don’t want to do is create a wildly unrealistic situation that you know will fail out of the gate; you want an honest expectation that your hypothesis will be upheld. For example, if you have specific SLOs, your hypothesis could be along the lines of, “This service will meet all SLOs, even under conditions of high latency within the data layer.” If you disprove that hypothesis, you have learned something about your system that you can explore and work to resolve.
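One way to check an SLO hypothesis like the one above is to evaluate each SLO against measurements taken while the data-layer latency is injected. In this Python sketch the SLO targets (a 300 ms p99 latency and 99.9% availability), the nearest-rank percentile method, and the function names are all assumptions for illustration; substitute your own SLO definitions.

```python
import math

# Hypothetical SLO targets; replace with your service's real ones.
SLOS = {"p99_latency_ms": 300.0, "availability": 0.999}


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list, for pct in (0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def evaluate_slos(latencies_ms: list[float], successes: int, total: int) -> dict[str, bool]:
    """Report whether each SLO was met during the experiment window."""
    return {
        "p99_latency_ms": percentile(latencies_ms, 99) <= SLOS["p99_latency_ms"],
        "availability": successes / total >= SLOS["availability"],
    }


# Made-up measurements collected while extra latency was injected into the data layer.
latencies = [120.0] * 98 + [250.0, 900.0]
results = evaluate_slos(latencies, successes=9_995, total=10_000)
print(results)                # {'p99_latency_ms': True, 'availability': True}
print(all(results.values()))  # True: the hypothesis is upheld for this run
```

If any value comes back `False`, the hypothesis is refuted for that run, which is a finding to explore rather than a failed experiment.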

There’s one other element to this prerequisite. Given that Chaos Engineering is focused on gaining new knowledge about your system, an experiment that only confirms something you know is broken doesn’t give you any new knowledge. Fix what you know is broken in a particular system before you introduce Chaos Engineering into that system. If you know that you don’t have the right security controls on your log-in page, fix that first. This doesn’t mean fixing every broken thing—that’s the worst prerequisite to Chaos Engineering ever. But as you choose areas to start experimenting in, address the known broken things on a system-by-system basis. Start small, and work your way to bigger problems and systems as you go.

Alignment to Respond

This is where Chaos Engineering projects can die on the vine: you run an experiment, learn something you should deal with, and everyone’s too busy with their daily work to do anything about it. The good news about this prerequisite is that, if you approach it wisely, you can address it at the same time as Social Awareness. You still need to treat it as a separate requirement, because it comes later and you won’t know your experimental results up front. As you get buy-in to conduct Chaos Engineering experiments, make sure everyone also agrees to do the work to fix whatever you find.

Again, the people who understand your systems best are the ones who will be most successful at reasoning about the results of experiments and determining the appropriate changes to make. Dr. Richard Cook coined the term “Above the Line” processing for this aspect of how we manage complex systems.

Below the line are the things practitioners typically think about when they discuss their systems: code repositories, monitoring systems, APIs, SaaS tools, test suites. Above the line are the people, processes, and organizations that build, shape, maintain, and tend to the technical aspects below the line. Most importantly, no single person can be responsible for all of it: the work is collaborative, ongoing, and challenging. Ideally, Chaos Engineering reveals hidden aspects of your system, and you will need that above-the-line processing to respond accordingly. I like to refer to this as your cultural infrastructure. To properly support Chaos Engineering, a solid cultural infrastructure is as important as your technical infrastructure.

Getting Started with Chaos Engineering: Game Days

Game days are a great way to get started with Chaos Engineering at your organization. Most engineering teams are generally familiar with the concept, and you don’t have to make it overly complicated. Most importantly, you don’t have to do them in production! More sophisticated programs end up operating in production, but that really is an advanced principle, and definitely not a prerequisite. You can get critical insights from running experiments safely in a staging or other test environment.

The TL;DR is:

- Get the right people in a room who are responsible for a system or set of systems.

- Shut off a component where you’d expect the system to be robust enough to tolerate losing it.

- Record the learning outcomes and bring the component back online.
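The three game-day steps can also be sketched as a small Python runner. The `stop`, `start`, and `observe` hooks are hypothetical placeholders for however your team actually shuts off and restores a component in staging (a script, a console, or a person at a keyboard). The point of the sketch is the `try`/`finally`, which makes sure the component comes back online even if something goes wrong while you’re observing.

```python
from typing import Callable


def run_game_day(component: str,
                 stop: Callable[[str], None],
                 start: Callable[[str], None],
                 observe: Callable[[], str]) -> list[str]:
    """Shut off one component, record what the team observes, and always restore it."""
    learnings: list[str] = []
    stop(component)
    try:
        learnings.append(f"{component} stopped; observed: {observe()}")
    finally:
        # Bring the component back online even if observing raised an exception.
        start(component)
        learnings.append(f"{component} restored")
    return learnings


# Usage with an in-memory stand-in for a staging environment.
state = {"cache": "up"}
notes = run_game_day(
    "cache",
    stop=lambda c: state.update({c: "down"}),
    start=lambda c: state.update({c: "up"}),
    observe=lambda: f"cache is {state['cache']}",
)
print(notes)  # ['cache stopped; observed: cache is down', 'cache restored']
print(state)  # {'cache': 'up'}
```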

New Relic has a helpful, detailed post on how to run game days.

You’re Not Alone

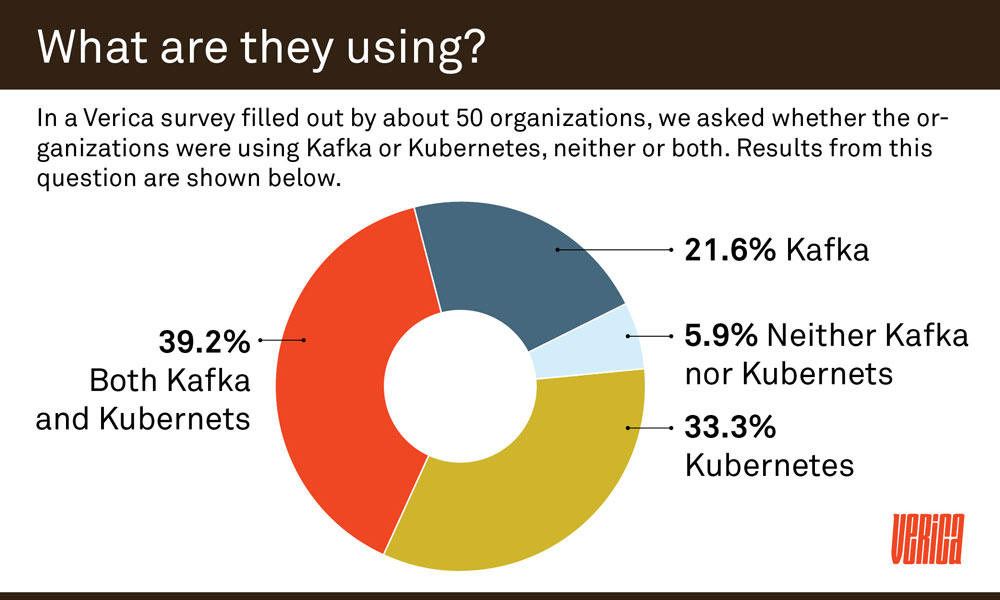

At Verica, we’re currently focused on building products for Kubernetes and Kafka, two technologies that are seeing huge adoption and a lot of production usage, and that are also very complex. We spoke with people from about 50 organizations using Kafka and/or Kubernetes to better understand their usage scenarios and their experience running production systems on top of these technologies.

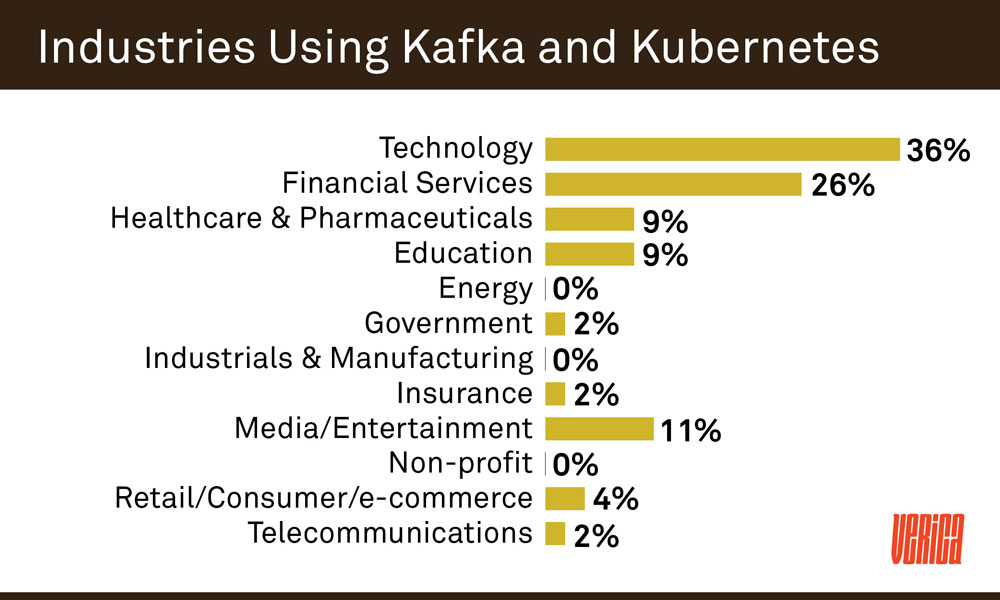

These organizations are running Kafka and Kubernetes in production, in some cases extensively, across a wide range of industries. It’s not surprising that we saw a lot of technology companies, but the second-biggest industry we talked to was financial services.

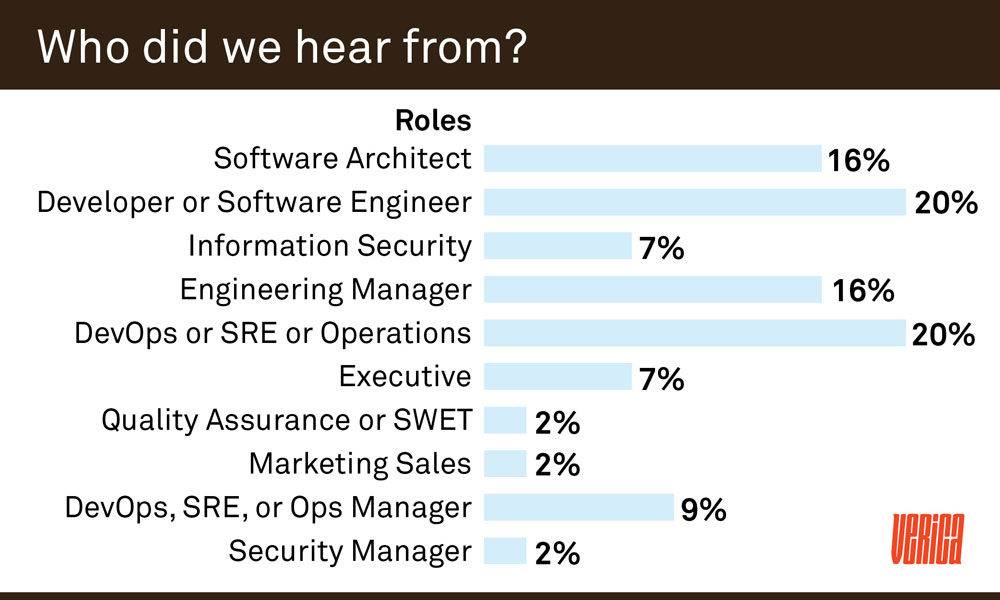

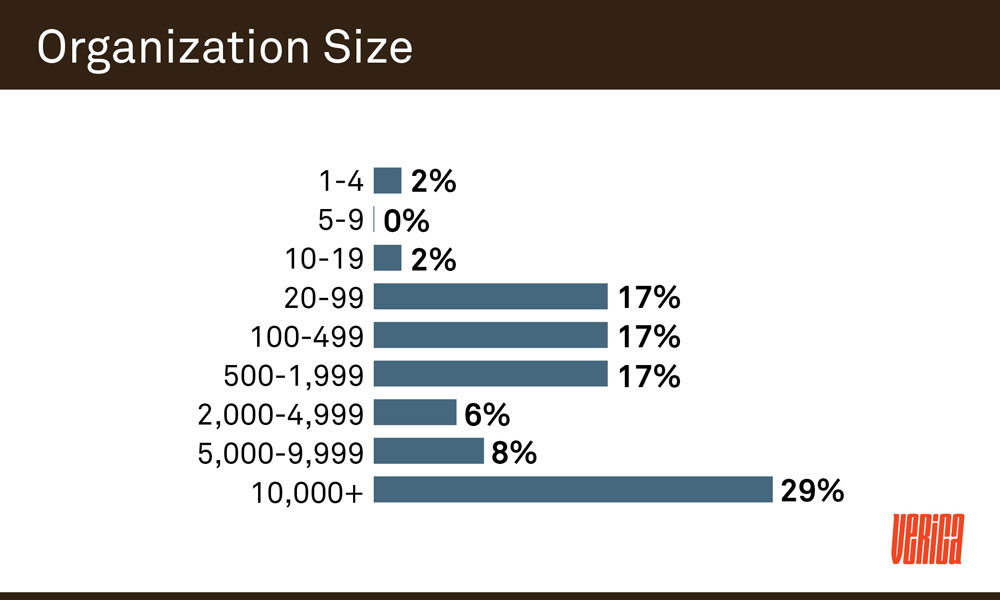

We expected to hear mostly from DevOps practitioners and SREs, along with software engineers. We did, but we also heard from architects, information security pros, engineering managers, and executives across nearly every size of organization.

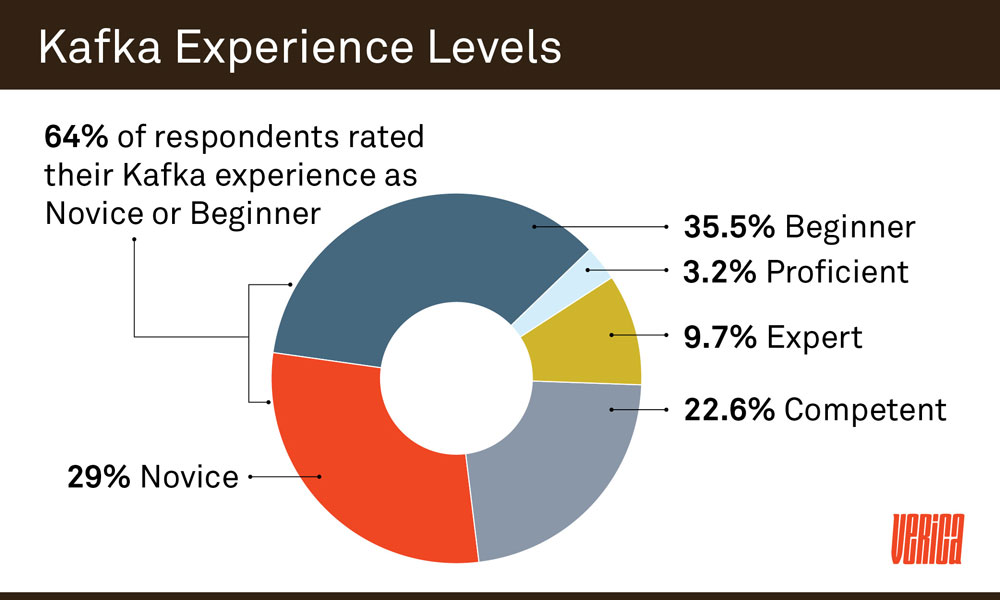

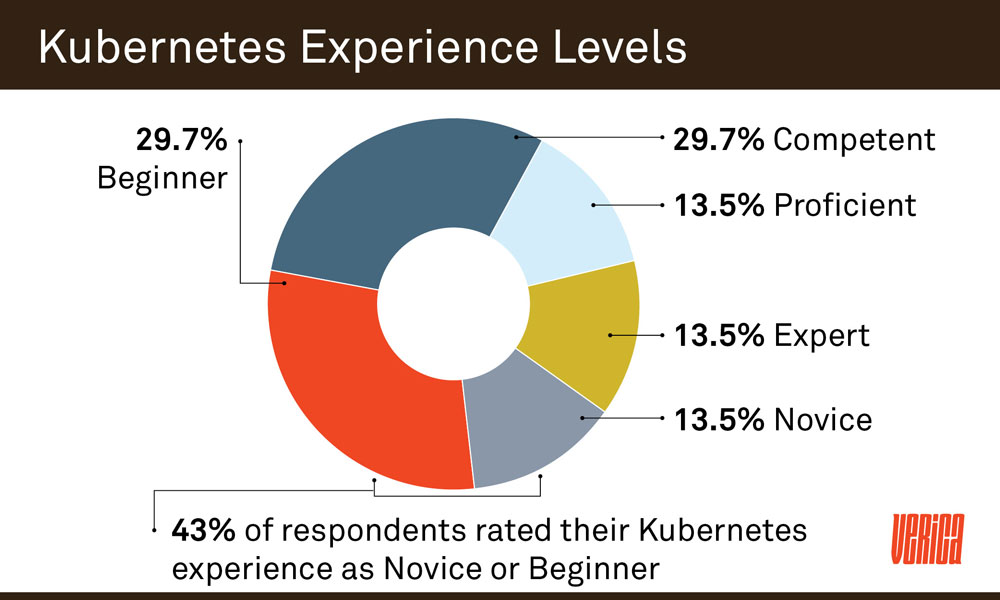

Things got interesting when we asked people to assess their own level of experience with Kafka and Kubernetes. Almost two-thirds of people running Kafka rated themselves as novice or beginner users, and barely more than half of Kubernetes users rated themselves above those levels.

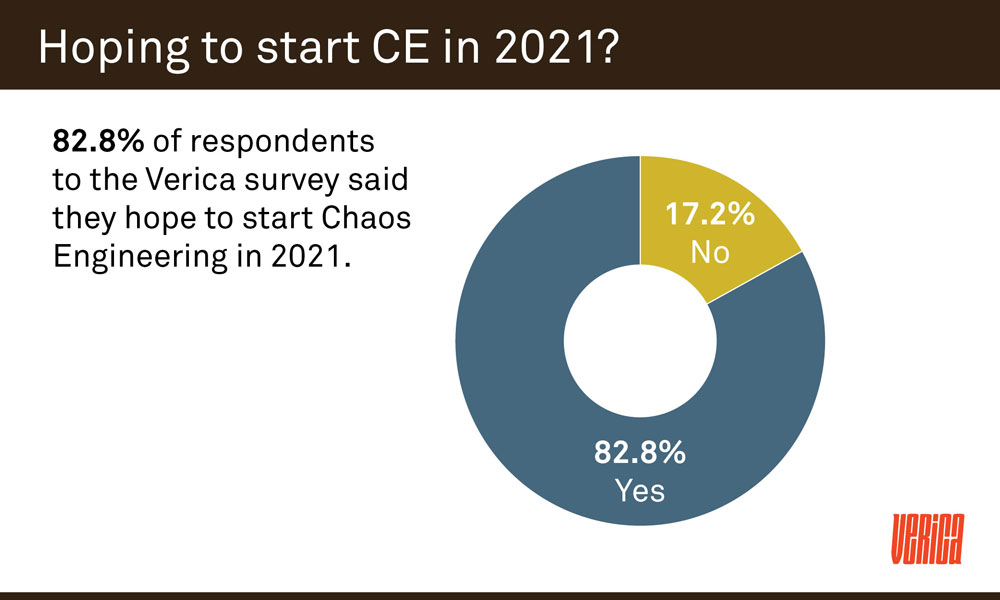

And despite the fact that most of the people we talked to aren’t in the “Proficient/Expert” category, 83% of them are hoping to start some kind of Chaos Engineering program this year.

These people are not wizard-level when it comes to Kafka or Kubernetes. Yet they are pushing complex systems into production and realizing that, by starting earlier with Chaos Engineering, they can gain the same kind of confidence in their systems that Netflix built over time.

Resources

- How Complex Systems Fail by Richard Cook

- Drift Into Failure by Sidney Dekker

- Chaos Engineering by Casey Rosenthal and Nora Jones (get a free copy!)

- Chaos Community Broadcast: Interviews with experts and pioneers in the space

Courtney Nash

Courtney Nash is a researcher focused on system safety and failures in complex sociotechnical systems. An erstwhile cognitive neuroscientist, she has always been fascinated by how people learn, and the ways memory influences how they solve problems. Over the past two decades, she’s held a variety of editorial, program management, research, and management roles at Holloway, Fastly, O’Reilly Media, Microsoft, and Amazon. She lives in the mountains where she skis, rides bikes, and herds dogs and kids.
